Word2Brain2Image: Visual Reconstruction from Spoken Word Representations

Abstract

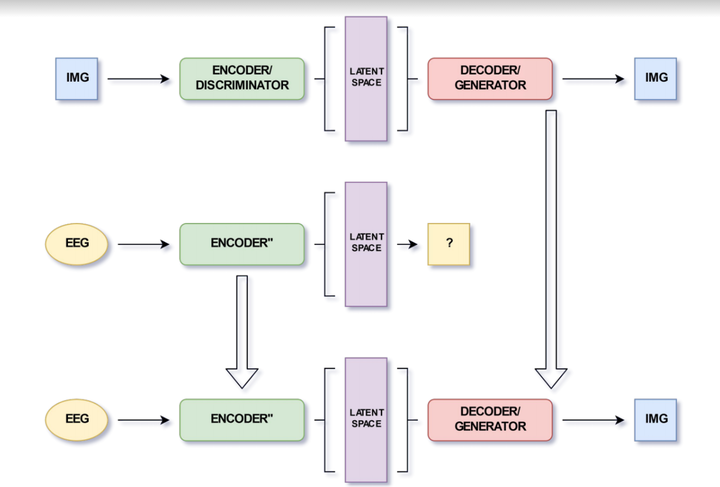

Recent work in cognitive neuroscience has sought to understand how the brain responds to external stimuli, and in particular which brain regions are involved in processing them. Ostarek et al. produced experimental evidence, using Continuous Flash Suppression (CFS), that low-level visual representations are involved in spoken word processing. For example, hearing the word ‘car’ induces a visual representation of a car in extrastriate areas of the visual cortex, and this representation appears to have some degree of spatial resolution. Although the structure of these brain areas has been studied extensively, their functional aspects have received far less attention. In this work, we take this a step further by experimenting with generative models such as Variational Autoencoders (VAEs) (Kingma et al. 2013) and Generative Adversarial Networks (GANs) (Goodfellow et al. 2014) to generate images purely from the EEG signals induced by listening to spoken words that name objects.